As the demand for faster and more scalable software solutions increases, IT teams are constantly exploring new strategies to streamline their processes. One strategy that has been gaining immense popularity is Kubernetes, a powerful container orchestration platform that builds on traditional containerization by automating how containers are deployed, scaled, and managed. In our latest article, we explore how Kubernetes is paving the way for microservices architectures, multi-cloud solutions, and much more, revolutionizing the world of application development as we know it.

Introduction to Kubernetes

Kubernetes is an open-source system for automating the deployment, scaling, and management of containerized applications. It was originally designed by Google and is now maintained by the Cloud Native Computing Foundation (CNCF).

Kubernetes is often used as a platform for running microservices-based architectures. It can run on multiple cloud providers, making it a popular choice for multi-cloud solutions. Kubernetes is also becoming increasingly popular for other types of workloads, such as batch processing and big data applications.

In this blog article, we’ll give you an introduction to Kubernetes. We’ll cover its history, how it works, and some of the key features that make it such a powerful tool.

History of Kubernetes

Kubernetes was created by Google in 2014 as an open-source system for automating the deployment and management of containerized applications. It builds on Google’s 15 years of experience running containers in production and was inspired by Borg, the company’s internal container orchestration system.

Since its launch, Kubernetes has rapidly become the de facto standard for container orchestration. It is now a hosted project under the Cloud Native Computing Foundation (CNCF), and is used by companies of all sizes, from startups to global enterprises, to manage their containerized applications at scale.

Kubernetes has continued to evolve at a rapid pace, with new features and capabilities added in each release. Notable additions over successive releases include autoscaling, features for safely sharing a cluster between teams (multi-tenancy) such as resource quotas and role-based access control (RBAC), improved networking and storage options such as the Container Storage Interface (CSI), and enhanced security features.

Looking to the future, Kubernetes is set to continue its role as the leading platform for container orchestration. With its broad ecosystem of partners and add-on projects, Kubernetes is paving the way for ever more complex microservices architectures and multi-cloud solutions.

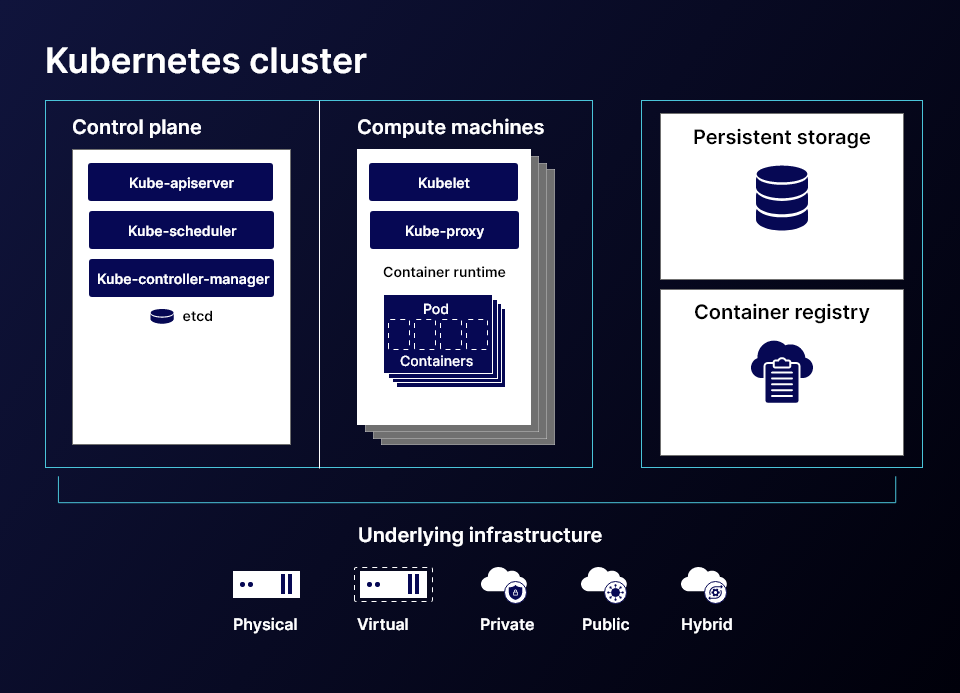

Components of Kubernetes

Kubernetes is a portable, extensible open-source platform for managing containerized workloads and services that facilitates both declarative configuration and automation. It has a vast, rapidly growing ecosystem.

Kubernetes supports a range of compute, storage, and network plugins to accommodate diverse workloads. For example, Kubernetes can schedule containers on physical or virtual machines (VMs). It can connect containers running on different nodes using overlay networks such as Weave Net or Flannel. It can also attach storage ranging from local disks to cloud block storage.

The following are the major components of Kubernetes:

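1. Master Node: This is the entry and control point for all administrative tasks, making sure that all requests to the cluster are handled and forwarded properly. Today it is more commonly called the control plane. It runs the API server, the scheduler, and the controller manager, and it is responsible for maintaining the system’s desired state.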

2. Node: A node is a worker machine that runs the containers managed by Kubernetes. Each node reports its state to the master node, such as available resources or execution failures. In return, it receives instructions to run new containers or adjust existing ones.

3. Kubelet: The kubelet is an agent that runs on each node in the cluster and manages the container workloads on that particular node. It communicates with the master node’s API server rather than with other nodes. It starts the containers assigned to its node through the container runtime and reports their status back, making sure that the node’s containers match their desired state.

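4. etcd: etcd is a consistent and highly available distributed key-value store. Kubernetes uses it as the backing store for all cluster data, including configuration, desired state, and current status. Because etcd holds the cluster’s source of truth, the control plane can compare it with what is actually running and steer the cluster back to its desired state when something goes wrong. For the same reason, backing up etcd is the standard way to protect a cluster’s data.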

5. Kubeadm: kubeadm is a tool for bootstrapping a cluster. Its kubeadm init command sets up a control-plane node, and kubeadm join adds further nodes, so that the cluster is ready to manage containerized workloads. It does not set up the container network itself; a network plugin such as Weave Net or Flannel is installed separately. It packs this functionality into a single binary that you can use to bootstrap your cluster.

6. Container Runtime: The container runtime is the software that actually runs containers on each node. Kubernetes talks to it through the Container Runtime Interface (CRI), so any CRI-compatible runtime can be used; containerd and CRI-O are common choices. Docker was historically the best-known runtime, but Kubernetes removed its built-in Docker Engine integration (dockershim) in version 1.24. Images built with Docker still run on containerd, CRI-O, and other CRI-compatible runtimes.
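The idea that ties these components together is the control loop. The control plane records a desired state in etcd, and controllers and kubelets keep comparing it with what is actually running and correct any difference. The Python sketch below is a toy model of that loop, not Kubernetes code. The `reconcile` and `apply_actions` functions, the app names, and the plain dict standing in for etcd are all hypothetical simplifications.

```python
from typing import Dict, List, Tuple

# (verb, app name, number of containers): a hypothetical action format
Action = Tuple[str, str, int]


def reconcile(desired: Dict[str, int], running: Dict[str, int]) -> List[Action]:
    """Compare desired container counts with running ones and return corrective actions."""
    actions: List[Action] = []
    for app in sorted(set(desired) | set(running)):
        want = desired.get(app, 0)
        have = running.get(app, 0)
        if have < want:
            actions.append(("start", app, want - have))
        elif have > want:
            actions.append(("stop", app, have - want))
    return actions


def apply_actions(actions: List[Action], running: Dict[str, int]) -> None:
    """Stand-in for the kubelet and container runtime carrying out the actions."""
    for verb, app, count in actions:
        delta = count if verb == "start" else -count
        running[app] = running.get(app, 0) + delta
        if running[app] == 0:
            del running[app]  # nothing left running for this app


if __name__ == "__main__":
    desired = {"web": 3, "worker": 1}  # what the control plane has recorded (etcd stand-in)
    running = {"web": 1, "legacy": 2}  # what is actually running on the nodes
    # Keep reconciling until actual state matches desired state.
    while actions := reconcile(desired, running):
        print("actions:", actions)
        apply_actions(actions, running)
    print("running:", running)
```

A real controller never "finishes". It watches for changes and re-runs this comparison whenever the desired or actual state moves, which is why a crashed container or a deleted workload is replaced without manual intervention.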

What are Clusters?

Clusters are the building blocks of Kubernetes architecture. Each cluster consists of a master node (the control plane) and typically a number of worker nodes, each of which can be a virtual or physical machine.

The master node runs the control plane, which maintains the desired state of the cluster and automates the deployment of workloads and the allocation of computing resources. Developers can use the kubectl command-line tool or the Kubernetes API to declare the desired state, which the master node then works to maintain.
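In practice, the desired state is usually written as a manifest and submitted with kubectl. The sketch below builds a minimal Deployment manifest (API version apps/v1) as a Python dict and prints it as JSON, which kubectl accepts alongside YAML. The helper name `deployment_manifest`, the app name `web`, and the image `nginx:1.25` are illustrative assumptions. A real manifest would typically also set resource requests, health probes, and other fields.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment manifest (hypothetical helper)."""
    labels = {"app": name}  # the selector must match the Pod template's labels
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # desired number of running copies
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(deployment_manifest("web", "nginx:1.25", 3), indent=2))
```

Assume the script is saved as make_manifest.py. Then running `python make_manifest.py > web-deployment.json` followed by `kubectl apply -f web-deployment.json` would ask the cluster to keep three copies of that container running. The manifest describes *what* should exist, not *how* to create it.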

Each worker node runs the tools needed to manage the containers that run the applications, such as the kubelet and a container runtime. Worker nodes act on the instructions they receive from the master node.
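One resource the control plane allocates is node capacity. The Kubernetes scheduler first filters out nodes that cannot fit a workload and then scores the remaining ones. The Python sketch below is a heavily simplified, hypothetical version of that filter-and-score idea. It looks only at CPU and memory requests and prefers the node with the most CPU left over. The real scheduler weighs many more factors through its plugins, and the class and field names here are invented for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_m: int       # unrequested CPU in millicores
    free_memory_mib: int  # unrequested memory in MiB


@dataclass
class Workload:
    name: str
    cpu_m: int       # requested CPU in millicores
    memory_mib: int  # requested memory in MiB


def schedule(workload: Workload, nodes: List[Node]) -> Optional[str]:
    """Pick a node for the workload, or return None if no node can fit it."""
    # Filter: keep only nodes with enough free CPU and memory for the request.
    feasible = [
        n for n in nodes
        if n.free_cpu_m >= workload.cpu_m and n.free_memory_mib >= workload.memory_mib
    ]
    if not feasible:
        return None  # in Kubernetes the workload would stay Pending
    # Score: prefer the node with the most CPU left after placement (ties keep list order).
    best = max(feasible, key=lambda n: n.free_cpu_m - workload.cpu_m)
    # Bind: reserve the requested resources on the chosen node.
    best.free_cpu_m -= workload.cpu_m
    best.free_memory_mib -= workload.memory_mib
    return best.name


if __name__ == "__main__":
    nodes = [Node("node-a", 2000, 4096), Node("node-b", 1000, 8192), Node("node-c", 500, 1024)]
    for w in [Workload("web", 600, 512), Workload("db", 1200, 6000), Workload("cache", 900, 2048)]:
        print(w.name, "->", schedule(w, nodes))
```

Spreading by free capacity is only one possible scoring choice. Packing workloads tightly onto fewer nodes is another, and the right one depends on whether you value headroom or cost.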

Advantages of Using Kubernetes for Enterprise IT

Organizations are turning to Kubernetes to help them accelerate their digital transformation initiatives. Some of the benefits of using Kubernetes for enterprise IT are:

Accelerated application development and deployment: Kubernetes can help organizations rapidly deploy and scale applications. Containers are lighter weight than traditional virtual machines and typically start much faster, so containerized applications can be packaged and deployed more quickly, and Kubernetes automates their rollout and scaling.
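The Horizontal Pod Autoscaler is an example of automated scaling. Its documentation describes the core rule as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The autoscaler skips the change when that ratio is within a tolerance of 1.0 (0.1 by default). The sketch below applies that rule and clamps the result between a minimum and maximum replica count. It ignores details the real controller handles, such as missing metrics, replicas that are not yet ready, and stabilization windows. The function name and default bounds are assumptions.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Apply the HPA scaling ratio, then clamp to [min_replicas, max_replicas]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: leave the count alone
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(3, 200, 100))  # 6: average use is double the target
    print(desired_replicas(4, 50, 100))   # 2: average use is half the target
    print(desired_replicas(4, 105, 100))  # 4: within the 10% tolerance
```

The tolerance band keeps small metric fluctuations from causing constant scale-up and scale-down churn.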

Enhanced application availability and resilience: Kubernetes enables organizations to create highly available applications by replicating services across multiple nodes. If a node or server fails, Kubernetes can automatically reschedule the affected workloads onto healthy nodes to keep the application running.
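The recovery described above works roughly like this: when a node fails, the workloads that were running on it are recreated on the remaining healthy nodes. The Python sketch below is a toy model under simplifying assumptions. It places each displaced workload on the healthy node running the fewest workloads, and it keeps the original workload names. Real Kubernetes controllers instead create replacement copies with new names and leave their placement to the scheduler. The function and node names are hypothetical.

```python
from typing import Dict, List


def reschedule(assignments: Dict[str, str], healthy_nodes: List[str]) -> Dict[str, str]:
    """Return a workload->node mapping with workloads on failed nodes moved to healthy ones."""
    if not healthy_nodes:
        raise ValueError("no healthy nodes left to run workloads on")
    # Count how many workloads each healthy node is already running.
    load = {node: 0 for node in healthy_nodes}
    for node in assignments.values():
        if node in load:
            load[node] += 1
    result: Dict[str, str] = {}
    for workload, node in sorted(assignments.items()):
        if node in load:
            result[workload] = node  # node is healthy: leave the workload where it is
        else:
            # Node failed: move the workload to the least-loaded healthy node (name breaks ties).
            target = min(healthy_nodes, key=lambda n: (load[n], n))
            load[target] += 1
            result[workload] = target
    return result


if __name__ == "__main__":
    before = {"web-0": "node-a", "web-1": "node-b", "web-2": "node-c", "web-3": "node-c"}
    # node-c has failed; only node-a and node-b remain healthy.
    print(reschedule(before, ["node-a", "node-b"]))
```

Choosing the least-loaded node spreads the displaced workloads. This avoids overloading a single survivor and creating a second failure.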

Improved developer productivity: With Kubernetes, developers can focus on coding rather than managing infrastructure. Kubernetes automates many of the tasks associated with deploying and managing containerized applications, such as scaling, load balancing, and rolling updates.
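A Deployment governs rolling updates with two settings. maxSurge limits how many extra copies may exist during the update, and maxUnavailable limits how many may be missing below the desired count. Both default to 25%, and percentages are converted to counts by rounding maxSurge up and maxUnavailable down. The sketch below simulates the sequence of old and new copy counts, under the simplifying assumption that every new copy becomes ready immediately. The function names are hypothetical, and the real Deployment controller works through ReplicaSets and readiness checks.

```python
import math
from typing import List, Tuple, Union


def resolve(value: Union[int, str], replicas: int, round_up: bool) -> int:
    """Turn an absolute count or a percentage string like '25%' into a count."""
    if isinstance(value, str) and value.endswith("%"):
        raw = replicas * int(value[:-1]) / 100
        return math.ceil(raw) if round_up else math.floor(raw)
    return int(value)


def rolling_update(replicas: int, max_surge: int, max_unavailable: int) -> List[Tuple[int, int]]:
    """Simulate (old, new) counts, assuming new copies become ready immediately."""
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    old, new = replicas, 0
    history = [(old, new)]
    while old > 0 or new < replicas:
        # Scale up: add new copies while the total stays within replicas + max_surge.
        room = replicas + max_surge - (old + new)
        if room > 0 and new < replicas:
            new += min(room, replicas - new)
            history.append((old, new))
        # Scale down: remove old copies while at least replicas - max_unavailable remain.
        removable = (old + new) - (replicas - max_unavailable)
        if removable > 0 and old > 0:
            old -= min(removable, old)
            history.append((old, new))
    return history


if __name__ == "__main__":
    surge = resolve("25%", 4, round_up=True)         # 1
    unavailable = resolve("25%", 4, round_up=False)  # 1
    for old, new in rolling_update(4, surge, unavailable):
        print(f"old={old} new={new}")
```

Setting maxUnavailable to 0 keeps full capacity throughout the update at the cost of extra temporary copies. Setting maxSurge to 0 avoids extra resources but briefly runs below the desired count.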

Unified management of hybrid and multi-cloud deployments: Organizations can use Kubernetes to manage workloads deployed across multiple clouds, including public clouds, private clouds, and edge locations. This gives organizations greater flexibility in how they deploy their applications.

Kubernetes is becoming increasingly popular as enterprises strive to improve their agility and speed of delivery.

Key Players in the Kubernetes Ecosystem

Kubernetes is available as a managed service from all major cloud providers, and its popularity is only increasing.

There are a growing number of vendors and startups that offer products and services for Kubernetes. Some of the key players in the Kubernetes ecosystem are:

AWS EKS: Amazon offers the Elastic Kubernetes Service (EKS). It’s a fully managed service that makes it easy to deploy and manage applications on Kubernetes.

Red Hat: Red Hat is a major contributor to the Kubernetes project. Its enterprise Kubernetes platform, OpenShift, can be run on premises or consumed as a managed service. For example, OpenShift Dedicated is available on Amazon Web Services (AWS).

Azure AKS: Azure Kubernetes Service (AKS) from Microsoft is a managed Kubernetes service that offloads much of the operational overhead of running a cluster to Azure, which manages the control plane. If your applications are already running on Azure, AKS integrates seamlessly with other Azure services.

GKE: Google is the creator of Kubernetes and still maintains a significant presence in the project. Google Kubernetes Engine (GKE) is a managed Kubernetes service that runs on Google Cloud Platform (GCP).

GitLab: GitLab provides comprehensive tools for managing the continuous integration, delivery, and deployment of containers. They also offer deep Kubernetes integration to simplify these processes.

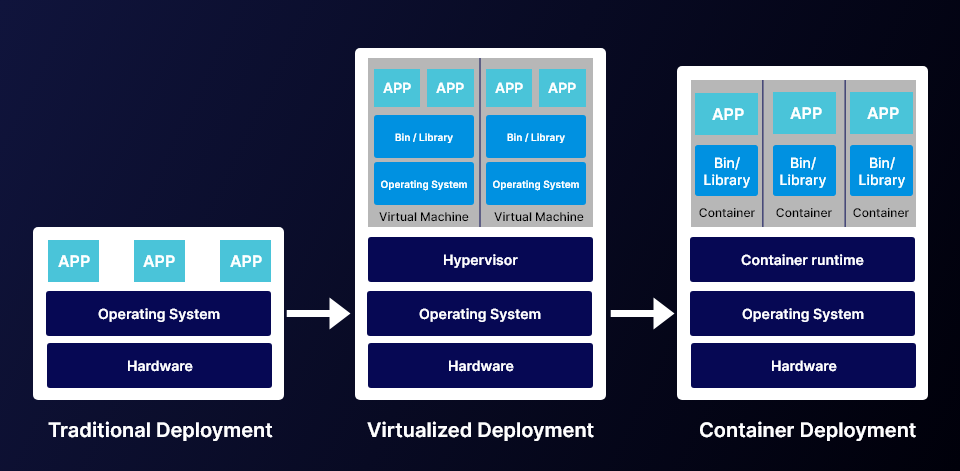

Transitioning to Containerization, Microservices Architectures, and Multi-Cloud Solutions

Transitioning to containerization and microservices architectures can be a daunting task for any organization, but Kubernetes is here to help. By simplifying the management of containers and providing a unified platform for running them, Kubernetes makes it easier to move your existing applications and services to a microservices architecture.

In addition, Kubernetes can also help you take advantage of multi-cloud solutions, allowing you to run your applications on any combination of public and private clouds.

Conclusion

Kubernetes is one of the primary tools for developers today who are looking to build distributed and scalable systems. Its container orchestration capabilities suit multi-cloud solutions and microservices architectures, which makes it an invaluable tool for businesses and individuals alike. Furthermore, because it is open source, anyone can use it and learn it freely. With all these features combined, Kubernetes has become a go-to choice for deploying applications in cloud and cross-platform environments.