Organizations today are heavily inclined to data for their business decisions. Yet, the distinction between good and bad data often gets blurred in the excitement to implement the latest AI and analytics tools. Data quality can be defined as the degree to which data meets a company’s expectations of accuracy, validity, completeness, and consistency. To ensure that their data is up to par for analytics and AI initiatives enterprises should begin with segregating good data from bad data.

Good Data vs. Bad Data: Understanding the Difference

Good Data is accurate, complete, consistent, timely, and relevant. It’s like having a perfectly brewed cup of coffee – it energizes and propels your initiative forward. Just as the right tools can streamline your operations and enhance productivity, good data sets the foundation of your decision-making processes with precision and reliability. It ensures that your analytics are spot-on, your AI models are trained on the right patterns, and your strategic decisions are grounded in reality. Good data is not just beneficial; it’s essential. It reduces errors, increases efficiency, and drives success across the enterprise.

Bad Data, on the other hand, is erroneous, incomplete, inconsistent, outdated, and irrelevant. When your data is inaccurate, it leads to faulty analyses, misguided strategies, and ultimately poor decisions. Incomplete data means you are working with partial truths, which can be more dangerous than no data at all. Inconsistent data causes confusion and errors, as different parts of your system might tell different stories. Outdated data can mislead you, basing your decisions on scenarios that no longer exist. Irrelevant data clutters your systems and wastes valuable resources. All these issues collectively lead to wasted time, increased costs, and missed opportunities.

In the world of AI and data analytics, the quality of your data is the backbone of your success. It determines whether your initiatives will thrive or falter. Investing in good data practices is not just a technical necessity but a strategic imperative.

How Bad Data Quality Can be Really Bad?

Bad data can lead to incorrect insights, poor decision-making, and costly mistakes. Here are a few examples:

Medical Misdiagnosis: Imagine a hospital relying on outdated patient records. A patient could receive the wrong treatment based on inaccurate data, potentially endangering their life.

Poor Inventory Visibility: A retail company using erroneous inventory data might overstock unpopular items while running out of bestsellers, leading to lost sales and unhappy customers.

Incorrect Financial Forecasting: Banks relying on inconsistent financial data might make incorrect predictions, affecting everything from loan approvals to stock investments, ultimately impacting their bottom line.

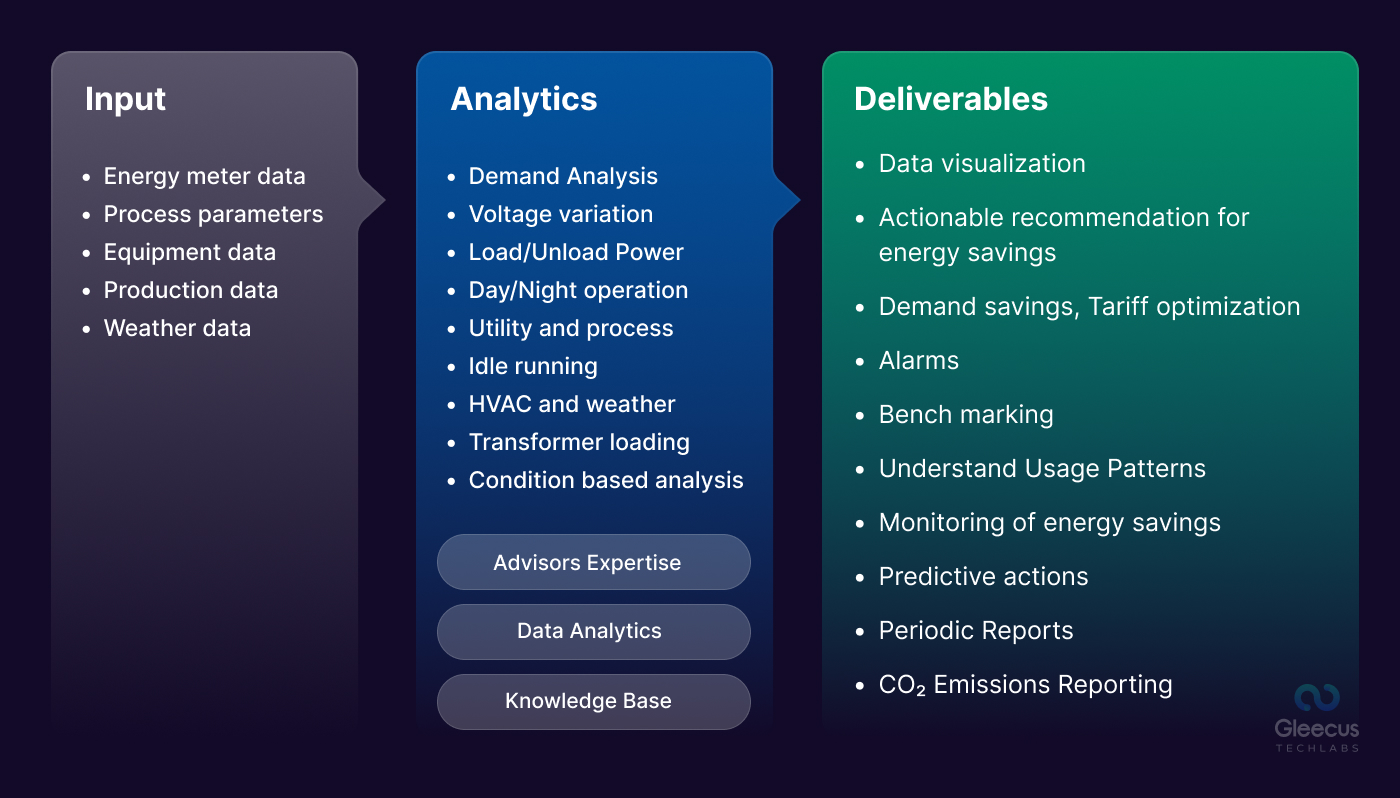

Poor Energy Audit: An energy company using inaccurate consumption data might overestimate demand, leading to excess production and wasted resources. Conversely, underestimating demand could result in blackouts and customer dissatisfaction.

Manufacturing Defects: A manufacturing firm using incorrect data about material properties or machine calibration settings might produce defective products, leading to costly recalls and damage to the brand’s reputation.

For instance, a famous case is the Toyota recall in 2009-2010, where errors in data management contributed to the production of faulty accelerators, leading to massive recalls and financial loss.

Transportation and Logistics Delays: A logistics company using faulty data for route planning might experience delays, increased fuel consumption, and higher operational costs. For example, a leading shipping company once faced significant delays and increased costs due to relying on outdated and incorrect traffic data, leading to missed deliveries and unhappy customers.

Marketing Campaign Failures: A marketing team working with bad customer data might target the wrong audience, leading to poor campaign performance and wasted advertising budget. A notable incident occurred with a major retailer that mistakenly targeted a large portion of their customer base with promotions for products they had no interest in, resulting in low engagement and wasted resources.

Government Policy Missteps: Governments relying on inaccurate census data might misallocate resources or design ineffective policies. For instance, a city government once used outdated population data to plan public transportation routes, leading to overcrowded buses in some areas and underutilized services in others, causing public dissatisfaction and inefficiency.

A well-known example of bad data quality leading to severe consequences is the Wells Fargo account fraud scandal. Employees, under pressure to meet sales targets, created millions of fake accounts. Due to poor data quality and oversight, these fraudulent activities went unnoticed for years. The fallout included substantial fines, loss of customer trust, and severe damage to the company’s reputation. This scandal highlighted the critical need for accurate data and robust data governance to prevent misuse and ensure transparency.

Why Data Quality is the Backbone of AI and Analytics?

Data Quality: The Foundation of Reliable Insights

High-quality data is essential for generating reliable insights. Data quality issues such as inaccuracies, missing values, and inconsistencies can lead to incorrect conclusions and misguided decisions. Ensuring data quality involves validating the accuracy, completeness, consistency, and relevance of data, which forms the bedrock of trustworthy analytics.

Accuracy: Accurate data reflects the true values and characteristics of the variables being measured. Inaccurate data can distort findings and lead to faulty interpretations.

Completeness: Complete data sets contain all necessary information without missing values, enabling comprehensive analysis.

Consistency: Consistent data maintains uniformity across various datasets and systems, preventing conflicting results and ensuring seamless integration.

Ensuring Fair and Effective AI Models

AI models are only as good as the data they are trained on. High-quality data is crucial for developing fair and effective AI models that can generalize well to new, unseen data. Poor data quality can introduce biases, reduce model performance, and compromise the integrity of AI-driven decisions.

Bias Reduction: High-quality data minimizes biases, leading to fairer and more equitable AI models. Biased data can result in discriminatory outcomes, which can be harmful and unethical.

Model Performance: Quality data enhances the performance of AI models, improving accuracy, precision, recall, and other performance metrics. Poor data quality can lead to overfitting, underfitting, and other issues that degrade model performance.

Generalization: High-quality data ensures that AI models can generalize well to new data, maintaining their effectiveness in real-world applications.

Making Well-Informed Decisions

Decision-making processes rely heavily on data-driven insights. High-quality data enables organizations to make well-informed decisions that are based on accurate and relevant information, leading to better outcomes and competitive advantages.

Strategic Planning: Reliable data supports strategic planning and forecasting, allowing organizations to anticipate market trends, customer behavior, and other critical factors.

Operational Efficiency: Quality data enhances operational efficiency by enabling accurate process optimization, resource allocation, and performance monitoring.

Risk Management: High-quality data supports effective risk management by providing accurate information for identifying, assessing, and mitigating risks.

Benefits of High-Quality Data in AI and Analytics

Enhanced Trust and Credibility: Stakeholders are more likely to trust and rely on analytics and AI-driven insights when they are based on high-quality data.

Improved Customer Satisfaction: High-quality data enables better understanding of customer needs and preferences, leading to more personalized and effective customer experiences.

Regulatory Compliance: Maintaining data quality helps organizations comply with regulatory requirements and standards, avoiding legal and financial repercussions.

Competitive Advantage: Organizations with high-quality data can leverage advanced analytics and AI to gain a competitive edge in the market.

Reach out to us today to learn how we can help you in extracting high quality data from your unsorted enterprise data.

The Impact of Biases and Wrong Analysis

Bad data quality doesn’t just lead to errors; it can also introduce biases and distortions in your analysis. When the data used to train AI models is flawed, the models can perpetuate and even amplify these biases, leading to unfair and potentially harmful outcomes. Here are some detailed examples to illustrate this:

Facial Recognition Bias: A classic example is facial recognition software that performs poorly on non-white faces because the training data lacked diversity. This issue came to light when studies revealed that several commercially available facial recognition systems had significantly higher error rates for darker-skinned individuals compared to lighter-skinned ones. This bias can lead to wrongful identifications and has serious implications for law enforcement and surveillance, potentially exacerbating racial profiling.

Hiring Algorithms: Many companies have turned to AI to streamline their hiring processes. However, if the training data used for these algorithms is biased, the results can be discriminatory. For instance, an AI hiring tool developed by a major tech company was found to favor male candidates over female ones because the training data was based on resumes submitted over a decade, during which most applicants were male. This led to the algorithm downgrading resumes that included the word “women’s,” as in “women’s chess club captain,” resulting in gender bias.

Loan Approval Systems: Financial institutions use AI to evaluate loan applications. If the historical data used to train these models contains biases—such as socioeconomic biases against certain racial or ethnic groups—the AI can perpetuate these biases. For example, if the data shows that a particular group has historically had higher default rates (perhaps due to systemic inequalities), the AI might unfairly deny loans to individuals from that group, regardless of their current financial situation.

Healthcare Algorithms: In healthcare, AI is used to predict patient outcomes and allocate resources. However, biased data can lead to unequal treatment. One well-documented case involved an algorithm used to predict which patients would benefit from additional healthcare services. The algorithm was found to be biased against black patients because it used healthcare costs as a proxy for health needs. Since black patients often have lower healthcare expenditures due to systemic barriers, the algorithm underestimated their needs, leading to inequitable care.

Criminal Justice: Predictive policing algorithms aim to forecast where crimes are likely to occur and who might commit them. If the training data is biased, these algorithms can reinforce existing prejudices. For instance, if certain neighborhoods have been over-policed historically, the data will reflect higher crime rates in those areas, leading the AI to suggest increased policing there, perpetuating a cycle of discrimination and surveillance.

Product Recommendations: E-commerce platforms use AI to recommend products to users. If the data reflects biased purchasing patterns, the recommendations can reinforce stereotypes. For example, if the data shows that women predominantly buy certain types of products, the AI might disproportionately suggest those products to female users, reinforcing gender stereotypes.

6 Ways to Mitigate Data Quality Challenges

Ensuring good data quality requires a proactive approach. Here are some strategies to consider:

1. Data Governance Framework

Establish a robust data governance framework with clear policies and procedures. This includes data ownership, stewardship, and accountability. A well-defined framework ensures that data is managed as a valuable asset. It should outline roles and responsibilities, data standards, and guidelines for data usage and access. This creates a culture of data quality and ensures that everyone in the organization understands their role in maintaining high data standards.

2. Regular Data Audits

Conduct regular data audits to identify and rectify inaccuracies and inconsistencies. Think of it as a routine health check-up for your data. These audits should review data for accuracy, completeness, consistency, and timeliness. Regular audits help catch errors early, preventing them from affecting larger datasets and analyses. By scheduling periodic audits, you ensure continuous data integrity and reliability.

3. Data Cleaning and Enrichment

Implement data cleaning processes to eliminate errors and enhance data quality. This involves removing duplicate records, correcting inaccuracies, and filling in missing information. Enrich your data with relevant external sources to improve completeness and relevance. For example, integrating third-party data can provide additional context and insights, making your data more robust and valuable for analysis.

4. Automated Data Quality Tools

Utilize automated tools for data profiling, validation, and monitoring. These tools can catch errors before they propagate through your systems. Automated tools can continuously scan for anomalies, inconsistencies, and inaccuracies, providing real-time alerts and facilitating prompt corrective actions. Implementing these tools ensures a higher degree of accuracy and consistency across your data pipelines.

5. Training and Awareness

Train employees on the importance of data quality and how to maintain it. After all, even the best tools can’t fix human negligence. Training programs should cover data quality principles, best practices, and the impact of poor data quality. By raising awareness and providing practical guidance, you empower your staff to contribute actively to maintaining high data standards.

6. Bias Detection

Implement tools and methodologies for detecting and addressing biases in your data. Bias detection tools can analyze data for signs of unfair representation or discrimination, helping to identify and mitigate biases early in the data processing pipeline. Regularly reviewing

Monitoring Data Quality Across Pipelines

Importance of Continuous Monitoring

Maintaining high data quality is crucial for the success of any data-driven initiative. Data quality issues can significantly impact analytics, machine learning models, and overall business decision-making processes. Continuous monitoring of data quality helps in promptly detecting and addressing issues, ensuring that the data remains reliable and accurate.

Real-Time Monitoring Solutions

Real-time monitoring solutions involve the implementation of tools and systems that can track the quality of data as it flows through the pipeline. These solutions are designed to identify anomalies and trigger alerts when issues are detected, enabling immediate response and remediation.

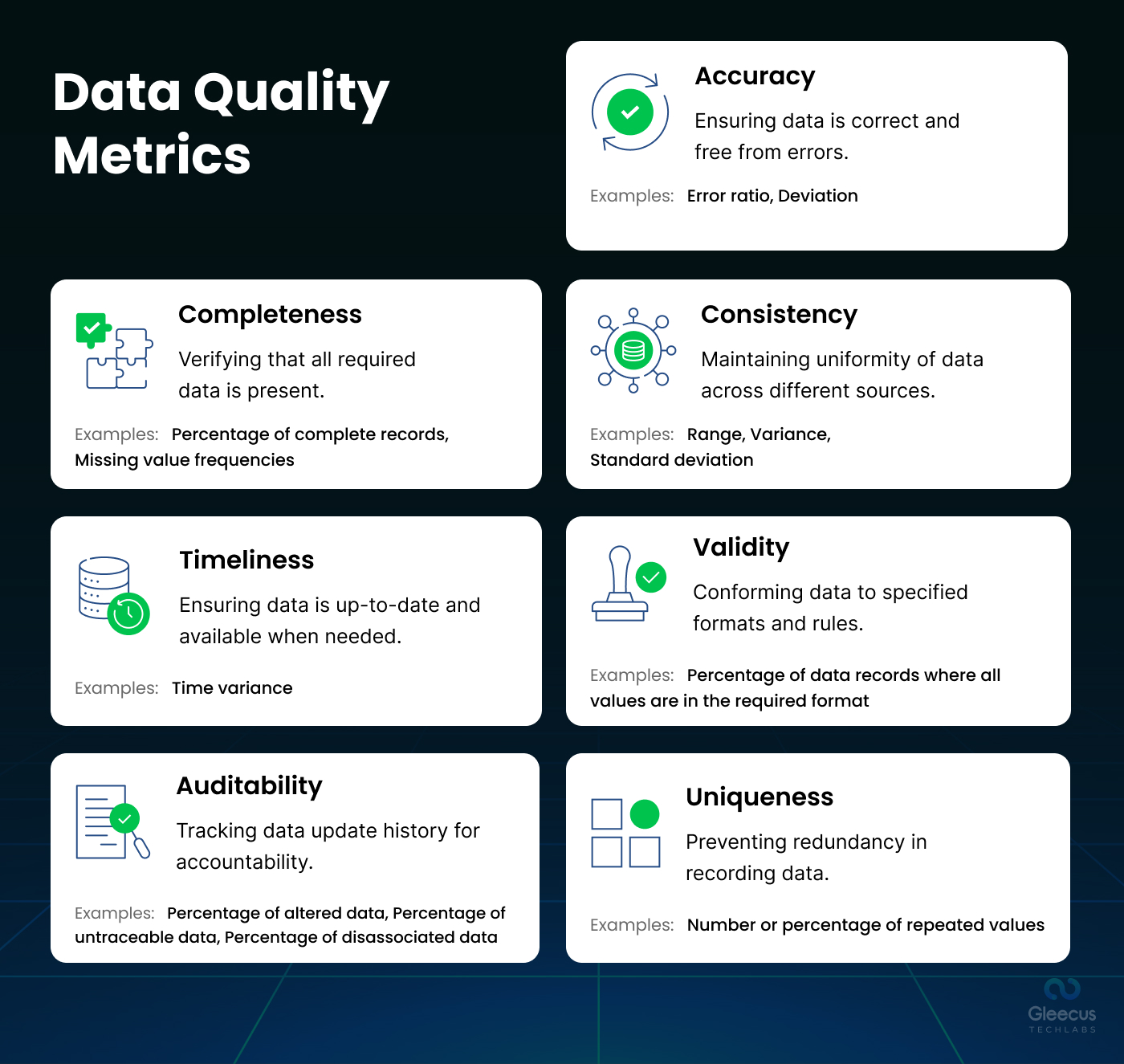

1. Data Quality Metrics

- Accuracy: Accuracy means that a measured value accurately reflects the true value, free from errors like outdated information, redundancies, and typos. To ensure the highest quality of your data, focus on continually improving its accuracy as your datasets continue to grow in volume.

- Completeness: Ensures data records are complete and contain enough information for accurate analysis, it’s important to track this data quality metric by identifying any fields with missing or incomplete values. Comprehensive data entries are essential for maintaining a high-quality dataset.

- Consistency: This implies data in your databases should be free from contradictions, ensuring that values from different datasets align correctly. To verify consistency, you might establish and adhere to specific data rules.

- Timeliness: Timeliness measures the delay between when an event occurs and when it is recorded. This metric ensures that your data remains accurate, accessible, and available for your analytics and AI initiatives.

- Validity: This ensures the consistency and correctness in format of data aligned to the established formatting rules. Tracking validity involves the percentage of format error for a data item against its total number of appearances across the database.

- Auditability: Auditability is the ability to track changes in data. Determining auditability involves accounting the number of fields where you cannot track the number of changes, time, and editor.

- Uniqueness: This ensures data is recorded only once without redundancy. Tracking uniqueness is essential to prevent double data entry.

2. Anomaly Detection

- Statistical Methods: Use statistical techniques to identify outliers and unexpected patterns in the data.

- Machine Learning Models: Implement machine learning models to detect complex anomalies that might not be evident through simple statistical methods.

- Rule-Based Systems: Define rules and thresholds for data quality metrics, triggering alerts when these thresholds are breached.

3. Alerting Mechanisms

- Real-Time Alerts: Implement systems that send immediate notifications via email, SMS, or other messaging platforms when anomalies are detected.

- Dashboards: Use real-time dashboards to provide a visual representation of data quality metrics and anomalies.

- Logs and Reports: Maintain detailed logs and generate regular reports for auditing and historical analysis.

Conclusion

Embarking on data analytics and AI initiatives without ensuring data quality is like building a product without design. It might look impressive initially, but it won’t stand the test of time. By prioritizing data quality, enterprises can unlock the true potential of their data, driving innovation, efficiency, and success.

Remember, in the world of data, quality trumps quantity. So, let’s set the supply chain of good quality data for our AI and analytics engines.