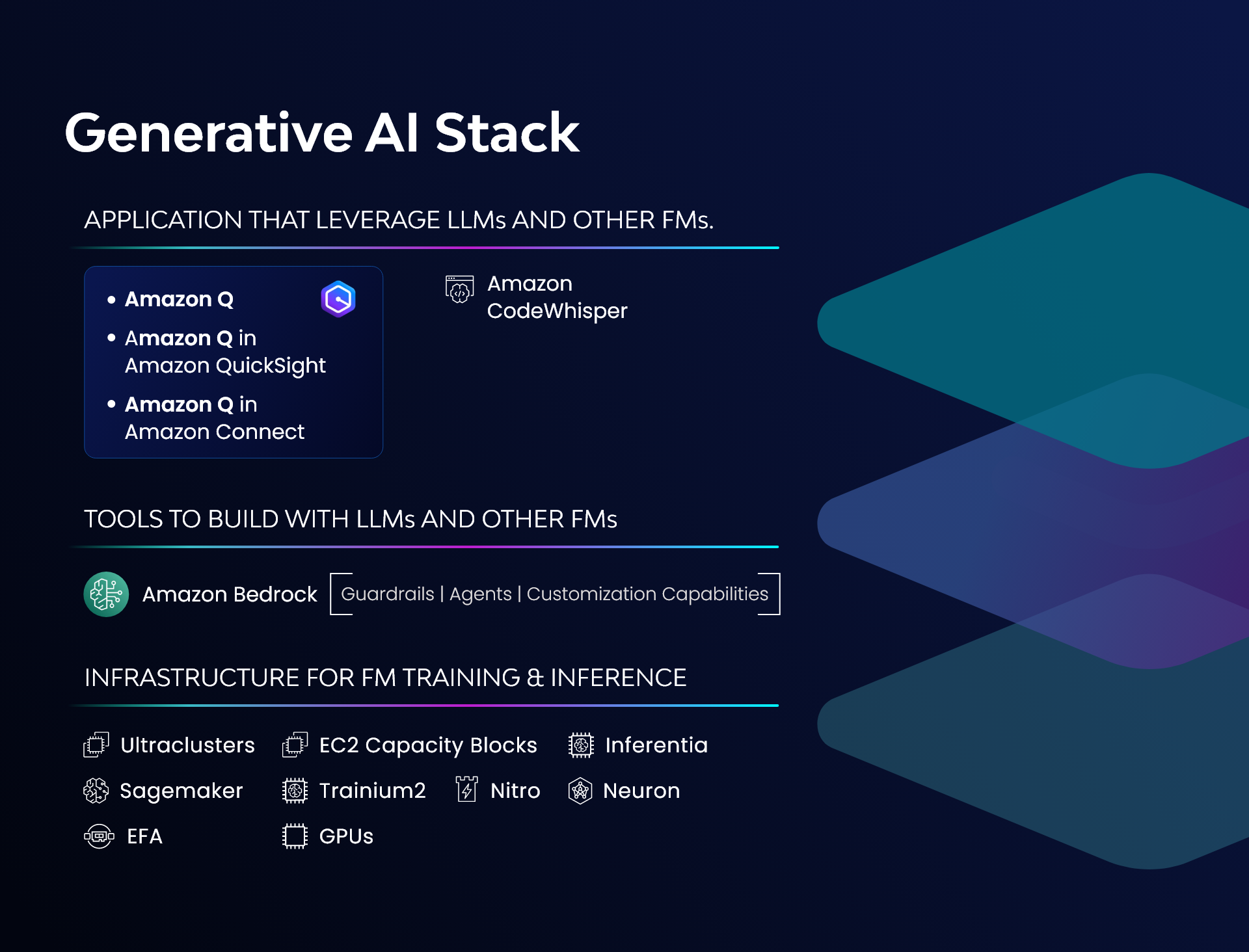

At the recently concluded AWS re:Invent 2023, Amazon showcased its ambition to transform cloud practices by launching a number of generative AI solutions across the layers of its cloud infrastructure. The features these solutions offer make the potential of generative AI to revolutionize customer experiences increasingly evident.

Anthropic and other leading AI firms have chosen AWS as their go-to cloud provider for crucial workloads and model training. Their preference for AWS stems from the company’s dedication to making cutting-edge technology accessible to customers of all scales and technical proficiencies. AWS continues its tradition of democratizing complex and transformative technologies, making them approachable and feasible for businesses of varying sizes and capabilities. Let us look at how AWS addresses the day-to-day challenges of cloud operations at each layer of its generative AI stack through the solutions it introduced at AWS re:Invent 2023.

Bottom Layer of The Stack: Infrastructure

At the bottom layer of AWS’s generative AI stack lies the infrastructure: compute, networking, frameworks, and the essential services needed to train and run LLMs and other foundation models (FMs). AWS remains at the forefront of innovation, continuously evolving to provide cutting-edge infrastructure tailored for machine learning (ML) operations. The two significant additions to this layer from AWS re:Invent 2023 are:

AWS Trainium2

AWS introduced Trainium2, a chip designed to improve price performance for training models with hundreds of billions to trillions of parameters. Customers can expect training up to four times faster than with the first-generation Trainium, and Trainium2 can be deployed in EC2 UltraClusters that deliver up to 65 exaflops of aggregate compute. At that scale, a 300-billion-parameter LLM can be trained in weeks rather than months.

Anthropic’s decision to use Trainium2 to train its future models underscores the chip’s performance, scalability, and energy efficiency. The collaboration between AWS and Anthropic aims at continued innovation, further advancing Trainium and Inferentia technologies for AI model training and inference.

Amazon SageMaker HyperPod

Amazon SageMaker HyperPod takes over the heavy lifting of building and optimizing machine learning (ML) infrastructure for training foundation models (FMs), reducing training time by up to 40%. It comes preconfigured with SageMaker’s distributed training libraries, which automatically split training workloads across thousands of accelerators so that they can be processed in parallel.
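To make the idea of splitting a workload across accelerators concrete, here is a minimal sketch of data parallelism, one common way to divide training work. It is not the SageMaker distributed training library API; the function names `shard_indices` and `average_gradients` are hypothetical and chosen only for illustration. Each worker receives a disjoint slice of the training samples, and the per-worker gradients are then averaged.

```python
from typing import List


def shard_indices(num_samples: int, num_workers: int, rank: int) -> List[int]:
    """Return the sample indices assigned to one worker (strided split)."""
    if not 0 <= rank < num_workers:
        raise ValueError("rank must be in [0, num_workers)")
    return list(range(rank, num_samples, num_workers))


def average_gradients(per_worker_grads: List[List[float]]) -> List[float]:
    """Average gradients element-wise, as a mean all-reduce would."""
    num_workers = len(per_worker_grads)
    return [sum(values) / num_workers for values in zip(*per_worker_grads)]


if __name__ == "__main__":
    # Four hypothetical workers share ten samples without overlap.
    for rank in range(4):
        print(rank, shard_indices(10, 4, rank))
```

In a real cluster the averaging step runs over the network between accelerators, which is where a managed library and a fast interconnect matter most.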

HyperPod keeps FM training running by saving checkpoints at regular intervals. When hardware fails, it detects the faulty instance, repairs or replaces it, and resumes training from the last checkpoint. Because the process is automated, it requires no customer intervention, and distributed training can run for weeks or months without disruption.
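The checkpoint-and-resume pattern described above can be sketched in a few lines. This is a toy illustration under stated assumptions, not HyperPod's implementation: the training state is a single number, the failure is simulated with an exception, and `save_checkpoint`, `load_checkpoint`, and `train` are hypothetical names.

```python
import json
import os
import tempfile
from typing import Optional, Set


def save_checkpoint(path: str, step: int, state: float) -> None:
    """Write to a temp file, then rename, so a crash never leaves a partial file."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump({"step": step, "state": state}, f)
    os.replace(tmp_path, path)


def load_checkpoint(path: str) -> dict:
    """Return the last saved checkpoint, or a fresh starting point."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0, "state": 0.0}


def train(path: str, total_steps: int, every: int,
          fail_at: Optional[Set[int]] = None) -> dict:
    """Toy training loop that resumes from the last checkpoint after a failure."""
    pending_failures = set(fail_at or ())
    restarts = 0
    while True:
        ckpt = load_checkpoint(path)
        step, state = ckpt["step"], ckpt["state"]
        try:
            while step < total_steps:
                if step in pending_failures:
                    pending_failures.discard(step)  # each simulated fault fires once
                    raise RuntimeError(f"simulated hardware failure at step {step}")
                state += 1.0  # stand-in for one optimizer step
                step += 1
                if step % every == 0:
                    save_checkpoint(path, step, state)
            save_checkpoint(path, step, state)
            return {"step": step, "state": state, "restarts": restarts}
        except RuntimeError:
            restarts += 1  # a real system would replace the faulty node here


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        ckpt_path = os.path.join(workdir, "ckpt.json")
        print(train(ckpt_path, total_steps=10, every=3, fail_at={5}))
```

Only the work done since the last checkpoint is repeated after a failure, so the checkpoint interval is a trade-off between save overhead and lost progress.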

Middle Layer of The Stack: Model as a Service

The middle layer of the AWS generative AI stack presents a compelling business advantage by offering pre-built foundation models, including LLMs, as a service. While some customers choose to build or modify their own models, many want to avoid that resource-intensive and time-consuming process. This layer lets businesses access and use these sophisticated models without a heavy upfront investment, so they can focus on their core operations rather than on model development.

Agents for Amazon Bedrock

Agents for Amazon Bedrock speed up generative AI app development by orchestrating multi-step tasks. An agent uses the reasoning abilities of a foundation model to break a user-requested task into multiple steps and generates an orchestration plan based on the developer’s instructions. It then executes the plan by invoking company APIs and querying knowledge bases through Retrieval Augmented Generation (RAG), and assembles a comprehensive response for the end user.
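The plan-then-execute flow can be illustrated with a small, self-contained sketch. It does not call Amazon Bedrock: the planner below uses simple keyword rules as a stand-in for the foundation model's reasoning, and the tools (`lookup_order_status`, `search_knowledge_base`) and their sample data are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    tool: str      # name of the tool to call
    argument: str  # input passed to that tool


def lookup_order_status(order_id: str) -> str:
    """Hypothetical company API; a real one would call an internal service."""
    return f"Order {order_id} has shipped."


def search_knowledge_base(query: str) -> str:
    """Hypothetical retrieval step over a tiny in-memory knowledge base."""
    documents = {
        "returns": "Items can be returned within 30 days.",
        "shipping": "Standard shipping takes 3 to 5 business days.",
    }
    hits = [text for key, text in documents.items() if key in query.lower()]
    return " ".join(hits) or "No matching document."


TOOLS: Dict[str, Callable[[str], str]] = {
    "order_api": lookup_order_status,
    "knowledge_base": search_knowledge_base,
}


def plan(request: str) -> List[Step]:
    """Stand-in for the model's reasoning: keyword rules, not an LLM."""
    steps: List[Step] = []
    order_ids = [w for w in request.replace("?", " ").split() if w.isdigit()]
    if order_ids:
        steps.append(Step("order_api", order_ids[0]))
    if "return" in request.lower() or "shipping" in request.lower():
        steps.append(Step("knowledge_base", request))
    return steps


def run_agent(request: str) -> str:
    """Execute each planned step in order and join the results into one response."""
    results = [TOOLS[step.tool](step.argument) for step in plan(request)]
    return " ".join(results) if results else "Sorry, I could not find a way to help."


if __name__ == "__main__":
    print(run_agent("Where is order 1234 and what is the returns policy?"))
```

The point of the sketch is the separation of concerns: the plan names which tools to call, and the executor only dispatches to them, so new APIs or knowledge bases can be added without touching the execution loop.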

Guardrails for Amazon Bedrock

AWS prioritizes the responsible development of generative AI by emphasizing education and science, and by helping developers integrate responsible AI throughout the product lifecycle. Guardrails for Amazon Bedrock lets teams implement safety measures consistently and keep user experiences aligned with company policies. Developers define the topics that are prohibited or harmful, and Guardrails detects and blocks them, acting as a barrier between users and the application. This adds a layer of content control on top of the protections already built into the FMs.

Guardrails can be applied to all large language models (LLMs) in Amazon Bedrock, including fine-tuned models, as well as to Agents for Amazon Bedrock. This keeps preferences uniform across applications, so teams can innovate while managing user experiences according to their specific requirements. Standardizing safety and privacy controls through Guardrails for Amazon Bedrock helps teams build responsible generative AI applications in line with their ethical AI objectives.
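As a rough illustration of a guardrail that sits between the user and the model, and that can be reused across models, consider the sketch below. It is not the Guardrails for Amazon Bedrock API: the `Guardrail` class and `guarded` wrapper are hypothetical, and plain substring matching stands in for whatever detection the service actually performs.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Guardrail:
    """Hypothetical policy: topics the application must refuse to discuss."""
    denied_topics: List[str] = field(default_factory=list)
    blocked_message: str = "Sorry, I can't help with that topic."

    def violates(self, text: str) -> bool:
        lowered = text.lower()
        return any(topic.lower() in lowered for topic in self.denied_topics)


def guarded(model: Callable[[str], str], guardrail: Guardrail) -> Callable[[str], str]:
    """Wrap any model so the same checks run on both the prompt and the response."""
    def call(prompt: str) -> str:
        if guardrail.violates(prompt):
            return guardrail.blocked_message
        response = model(prompt)
        if guardrail.violates(response):
            return guardrail.blocked_message
        return response
    return call


def echo_model(prompt: str) -> str:
    """Toy model that simply repeats the prompt."""
    return f"You asked: {prompt}"


def leaky_model(prompt: str) -> str:
    """Toy model that always drifts into a denied topic."""
    return "Here is some investment advice: buy everything."


if __name__ == "__main__":
    policy = Guardrail(denied_topics=["investment advice"])
    for model in (echo_model, leaky_model):
        print(guarded(model, policy)("What is the weather today?"))
```

Because the policy is defined once and wrapped around each model, every application behind it enforces the same rules, which is the uniformity the section describes.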

Top Layer of The Stack: Applications

At the stack’s top layer are applications that harness LLMs and other FMs to unlock the power of generative AI in practical business scenarios. Coding is one domain that generative AI is already transforming.

Amazon Q

Amazon Q, an AWS generative AI chatbot, serves diverse users—from IT professionals, developers, and business analysts to contact center agents—facilitating data queries, content creation, and code generation.

Amazon Q can be accessed through the AWS Management Console, third-party apps, or documentation pages. Positioned as a workplace AI assistant with more than 40 built-in connectors, it links to company resources, code, data, and systems for versatile querying. It also works with AWS services such as Amazon QuickSight, Amazon Connect, and AWS Supply Chain.

Users can explore Q’s capabilities to unlock insights from stored data or to streamline supply chain queries by asking questions in natural language. For example, a user can ask “What’s the financial impact of delayed replenishment orders?” and Q will provide an in-depth analysis.

Q also integrates with Amazon QuickSight. When a user asks about sales by region, Q quickly generates a chart that is ready to add to a dashboard and that users can customize to their needs.

Q in Connect responds to customer queries in real time. Whether the question is about rental car reservation fees or anything else, Q generates detailed responses that help contact center agents assist customers swiftly and accurately, improving customer satisfaction.

Conclusion

In conclusion, the recent product announcements at AWS re:Invent 2023 represent a significant step forward in the development and adoption of generative AI technology. By giving businesses the tools and resources they need to harness generative AI, these announcements have the potential to transform how information is processed, queried, and stored, and how foundation models and LLMs are trained in the AWS cloud. While the full impact of these announcements remains to be seen, generative AI is clearly poised to play a major role in shaping how businesses use the cloud.